The Need for Speed: Demand for Low-Latency Streaming Is High

According to Bitmovin’s “Video Developer Report 2019,” latency was a concern for 54% of all survey participants. Digging into the numbers, subsequent questions revealed that almost 50% of survey participants planned to implement a low-latency technology over the next 1–2 years, with over 50% seeking latency of under 5 seconds and 30% seeking latency of under 1 second (Figure 1).

All of this bodes well for an article on low-latency options, don’t you think? I’ll start with a list of things to know about low-latency technologies, then provide a list of considerations for choosing one.

Figure 1. Latency plans and expectations from Bitmovin’s “Video Developer Report 2019”

WebRTC

Most low-latency solutions use one of three technologies: WebRTC, HTTP Adaptive Streaming (HAS), or WebSockets. According to the WebRTC.org FAQ page, “WebRTC is an open framework for the web that enables Real-Time Communications in the browser.” WebRTC has reached the Candidate Recommendation stage in the World Wide Web Consortium (W3C) standards organization but has not yet received final approval. Still, according to Wikipedia, WebRTC is supported by all major desktop browsers as well as browsers on Android, iOS, Chrome OS, Firefox OS, Tizen 3.0, and BlackBerry 10. This means that it should run without downloads on any of these platforms.

By design, WebRTC was formulated for browser-to-browser communications. As the name suggests, it is a protocol for delivering live streams to each viewer, either peer-to-peer or server-to-peer. In sharp contrast, HAS-based solutions divide the stream into multiple chunks for the client to download and play. For large-scale streaming, WebRTC is typically the engine for an integrated package that includes the encoder, player, and delivery infrastructure.

Examples of WebRTC-based large-scale streaming solutions include Real Time from Phenix, Limelight Realtime Streaming, and Millicast from CoSMo Software and Influxis. You can also access WebRTC technology in tools like the Wowza Streaming Engine or from CoSMo Software, although you’d have to create a scalable distribution system for large-scale applications. Latency times for technologies in this class range from 0.5 seconds for 71% of the streams (Real Time) to under 1 second (Limelight Realtime Streaming).

HAS-Based Solutions

There are multiple HAS-based solutions from multiple vendors, although they operate in many different ways. All of them deploy a form of chunked encoding that breaks the typical 2–6-second segment into chunks that can be downloaded without waiting for the rest of the segment to finish encoding. These chunks are shown at the bottom of Figure 2, which is from an Akamai blog post by Will Law.

In addition to chunked encoding, these systems adjust the manifest file to signal the availability of the chunks, which are pushed to the origin server via HTTP 1.1 chunked transfer encoding. In terms of expected latency, Law’s post states, “If distribution is happening over the open internet (especially over a last-mile mobile network where rapid throughput fluctuations are the norm), current proofs-of-concept show more sustainable Quality of Experience (QoE) with a glass-to-glass latency in the 3s range, of which 1.5s-2s resides in the player buffer.”
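To make the transport step concrete, here is a minimal sketch of how HTTP 1.1 chunked transfer encoding frames a body: each chunk is prefixed with its size in hexadecimal, and a zero-length chunk ends the body. The function names and the sample payloads are hypothetical and purely illustrative; a real encoder or packager would rely on its HTTP library to do this framing rather than hand-rolling it.

```python
def encode_chunked(chunks):
    """Frame byte strings with HTTP/1.1 chunked transfer encoding.

    Each chunk becomes: <size in hex> CRLF <data> CRLF.
    A final zero-size chunk marks the end of the body.
    """
    for chunk in chunks:
        if not chunk:
            continue  # skip empties; a zero-size chunk would end the body early
        yield f"{len(chunk):X}".encode("ascii") + b"\r\n" + chunk + b"\r\n"
    yield b"0\r\n\r\n"


def decode_chunked(body):
    """Split a chunked body (no trailers) back into its original chunks."""
    chunks = []
    pos = 0
    while True:
        eol = body.index(b"\r\n", pos)
        # Ignore any chunk extensions that follow a ';' on the size line.
        size = int(body[pos:eol].split(b";")[0].decode("ascii"), 16)
        pos = eol + 2
        if size == 0:
            break
        chunks.append(body[pos:pos + size])
        pos += size + 2  # skip the data and its trailing CRLF
    return chunks


if __name__ == "__main__":
    # Hypothetical payloads standing in for encoded video chunks.
    framed = b"".join(encode_chunked([b"moof+mdat-1", b"chunk2"]))
    print(framed)
    print(decode_chunked(framed))
```

Because each chunk is sent as soon as it exists, the sender never needs to know the full segment length up front, which is what lets a chunk leave the encoder before the rest of the segment has finished encoding.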

There are many joint-development efforts around these schemas as well as some standards beyond chunked encoding and HTTP 1.1 chunked transfer encoding, which have long been standards. One cluster of development was around Low-Latency HLS and the hls.js open source player, which includes contributions from Mux, JW Player, AWS Elemental, and MistServer. There’s also a Digital Video Broadcasting (DVB) specification for Low-Latency DASH, with low-latency guidelines from the DASH Industry Forum. Obviously, each spec only applies to the designated technology, so you have to implement both to deliver low latency to both HTTP Live Streaming (HLS) and DASH clients.

There are also Common Media Application Format (CMAF) chunk-encoded solutions that allow delivery to both HLS and DASH clients from a single set of files. There are many advantages to low-latency CMAF-based approaches, including legacy player support. That is, if the player isn’t low-latency-capable, it will simply retrieve and play the segments with normal latency. In addition, because the file format is standards-based, current DRM technologies, captions, and advertising insertion should work normally, and the HTTP segments should be cacheable and present no problem for firewalls.

For the most part, standards-based approaches like these provide the most ecosystem flexibility, allowing you to choose your encoder, packager, CDN, and player just like you would for normal latency transmissions.

WebSockets-Based Approaches

The third approach is typically based on a real-time protocol like WebSockets, which creates and maintains a persistent connection between a server and client that either party can use to transmit data. This connection can be used to support both video delivery and other communications, which is convenient if your application needs interactivity.

Like WebRTC implementations, those that use WebSockets are typically offered as a service that includes a player and CDN, and you can use any encoder that can transport streams to the server via RTMP or WebRTC. Examples include Nanocosmos’s nanoStream Cloud and Wowza Streaming Cloud With Ultra Low Latency. Wowza claims sub-3-second latency for its solution, while Nanocosmos claims about 1 second, glass-to-glass.

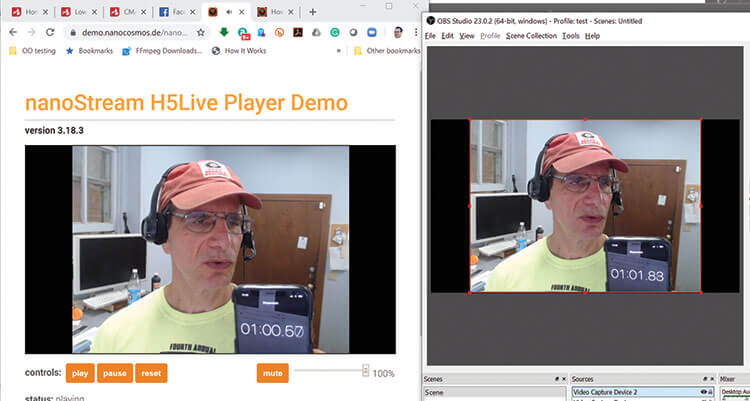

Figure 3 shows a test of the nanoStream Cloud in which I’m encoding via OBS on my HP notebook (right window), sending the stream to the Nanocosmos server, and playing it back in the H5Live Player. I’m holding up an iPhone with the timer running, and latency is in the 1.3-second range. Note that iPhones don’t natively support WebSockets, so Nanocosmos created a unique Low-Latency HLS protocol to minimize latency.

Figure 3. Nanocosmos’s WebSockets-based service showed sub-1.5-second latency.

Latency Targets Largely Dictate Choice of Technology

With live streaming, there are three factors to balance: quality, latency, and robustness. You can get any two for an event, but you can’t get all three. For example, the reason out-of-the-box HLS has an approximately 18-second latency is that each segment is 6 seconds long and the Apple default player buffers three segments before starting playback. The benefit is that the viewer will have to suffer a sustained bandwidth event before seeing any degradation. If you cut latency down to 3 seconds via any means, it only takes a short bandwidth blip to stop the stream.

From a technology-selection perspective, there are three levels of latency. The first is “doesn’t matter,” which is a one-to-many presentation with little interaction and no live television. Think of a church service, city council meeting, or even a remote concert. For applications like these, drop your segment size to 2–4 seconds, and you can reduce latency to about 6–12 seconds with very little risk, no development cost, and minimal testing cost. Lower latency isn’t always better if you don’t absolutely need it.
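The arithmetic behind those numbers is simple enough to sketch. The snippet below assumes, as a rough model only, that latency is dominated by the player's startup buffer (segment duration times the number of segments buffered) and ignores encoding, packaging, and CDN delays. The function name and the default of three buffered segments are illustrative assumptions based on the default-player behavior described above.

```python
def buffer_latency_seconds(segment_seconds, buffered_segments=3):
    """Approximate latency contributed by the player's startup buffer.

    Rough model: the player waits for `buffered_segments` complete
    segments before starting playback. Encoder, packager, and CDN
    delays are deliberately ignored.
    """
    if segment_seconds <= 0 or buffered_segments <= 0:
        raise ValueError("segment duration and buffer depth must be positive")
    return segment_seconds * buffered_segments


if __name__ == "__main__":
    # Default HLS: 6-second segments, three buffered -> about 18 seconds.
    print(buffer_latency_seconds(6))
    # Shorter 2- and 4-second segments -> about 6 and 12 seconds.
    print([buffer_latency_seconds(s) for s in (2, 4)])
```

The same model shows why shorter segments trade robustness for speed: with 2-second segments, the player holds only about 6 seconds of video in reserve when bandwidth drops.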

The second level is “spoiler time,” or the proverbial enthusiast watching TV next door who starts shouting (and tweeting) about a touchdown 30 seconds before you see it. Most broadcast channels average about 5–10 seconds of latency; most of the latency estimates for HTTP-based technologies are in the 2–5-second range, which should comfortably meet this requirement, if not provide streaming with a noticeable advantage over broadcast.

The third level is “real time,” as required by interactive applications like gambling and auctions in which even 2 seconds is too long. At least in the short term, HTTP-based technologies probably can’t deliver this at scale, so you’ll be looking at a WebRTC- or WebSockets-based solution.
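As a rough summary of the three levels, the sketch below maps a target latency to the class of technology discussed for it. The cutoffs (6 seconds and 2 seconds) are my own simplification of the ranges quoted above, not fixed industry thresholds, and the function name and return strings are hypothetical.

```python
def suggest_technology(target_latency_seconds):
    """Map a latency target to a technology class (illustrative cutoffs).

    Assumed cutoffs, loosely derived from the ranges in the text:
      6 s or more     -> standard HLS/DASH with shorter segments
      2 s up to 6 s   -> low-latency HAS
      under 2 s       -> WebRTC or WebSockets
    """
    if target_latency_seconds <= 0:
        raise ValueError("target latency must be positive")
    if target_latency_seconds >= 6:
        return "standard HLS/DASH with 2-4-second segments"
    if target_latency_seconds >= 2:
        return "low-latency HAS (Low-Latency HLS, Low-Latency DASH, or chunked CMAF)"
    return "WebRTC- or WebSockets-based service"


if __name__ == "__main__":
    for target in (10, 3, 1):
        print(f"{target} s target: {suggest_technology(target)}")
```

A real decision would also weigh captions, advertising, DRM, and VOD requirements, which a single latency number cannot capture.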

If you’re a broadcaster, while 1-second latency sounds great, WebRTC- and WebSockets-based solutions may have several key limitations. First, you’ll need captions and advertising support, which few services deliver. Second, you may need DRM; although several non-HAS services offer content protection, and forensic watermarking may soon be available, it’s a totally different solution than the CENC-based DRMs used for DASH, HLS, or CMAF. Third, your video quality for a given bitrate may suffer compared to HAS due to certain encoding constraints that may be imposed on some, but not all, WebRTC encoders.

Finally, low-latency HAS services produce content that’s both backward-compatible with players that don’t support low latency and immediately available for DVR or video-on-demand (VOD) delivery. WebRTC- and WebSockets-based systems can make their streams immediately available after the live broadcast for VOD, but not in the traditional adaptive bitrate (ABR) format without transcoding. If you’re broadcasting a single hour a month, the cost to convert the stream for HAS-based VOD (if desired) is negligible; if you’re producing 200 channels of TV each hour, it adds up quickly.

The HAS Market Is Evolving Rapidly

As mentioned, there were multiple groups working toward DASH- or HLS-based low-latency solutions. Apple managed to surprise them all when it announced its preliminary specification for Low-Latency HLS, generally referred to as LL-HLS. The spec differs from previous efforts in two key ways. First, it enables transport stream chunks and fragmented MP4 files, whereas DASH only supports the latter (technically, DASH supports transport streams in the spec, although they’re seldom, if ever, used). If you use transport stream chunks to deliver low-latency video to HLS, you’ll need a separate stream using fragmented MP4 files to support DASH low-latency output.
